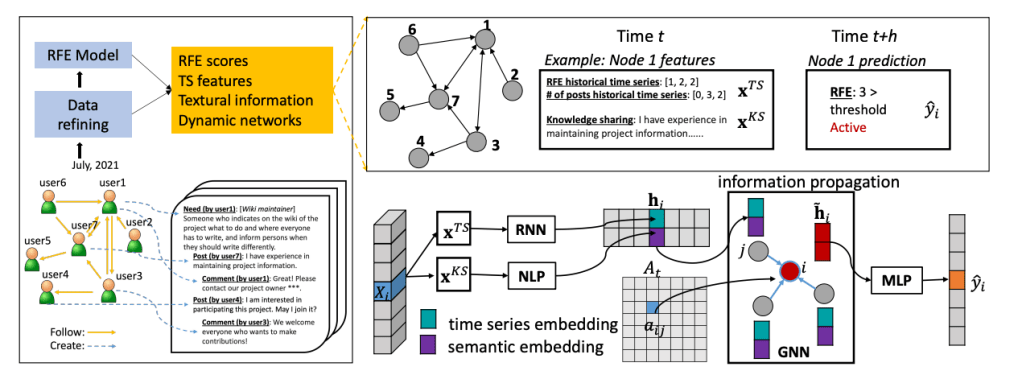

As online platforms increasingly rely on voluntary contributions, from open science to collaborative innovation, the ability to anticipate user engagement has become both a scientific and a practical priority. Yet predicting who will stay active, who will disengage, and why remains a complex challenge. Our recent paper, KEGNN: Knowledge-Enhanced Graph Neural Networks for User Engagement Prediction (Fan et al., International Conference on Multimedia Retrieval 2025), introduces a novel framework that addresses this challenge by integrating behavioral, social, and semantic signals into a unified predictive model.
